August 2023

The Equal Employment Opportunity Commission (EEOC) settled a lawsuit with iTutorGroup for $365,000 over AI-driven age discrimination, the first US settlement involving AI-powered recruitment tools. In 2020, iTutorGroup used an algorithm that automatically rejected older applicants because of their age, violating the Age Discrimination in Employment Act (ADEA). The settlement prohibits iTutorGroup from automatically rejecting tutors over 40 or rejecting anyone based on their sex, and the company is expected to comply with all relevant non-discrimination laws. HR tech vendors and users are likely to face more lawsuits targeting automated employment decision tools across the US.

There is a push to specifically regulate the use of HR tech for employment decisions due to the potential for algorithms trained on biased data to perpetuate bias and have an even greater impact than human prejudices. Algorithmic assessment tools can be more complicated to validate, potentially making it more difficult to justify the tool’s use. Algorithms can reduce the explainability of hiring decisions, so disclosure to applicants on the use of automated tools and how they make decisions may be necessary. Well-crafted laws for HR tech can mandate disclosures to applicants, minimise bias through auditing, and require proper validation of these automated systems, complementing broader anti-discrimination laws. Policymakers worldwide are increasingly targeting HR tech for regulation.

July 2023

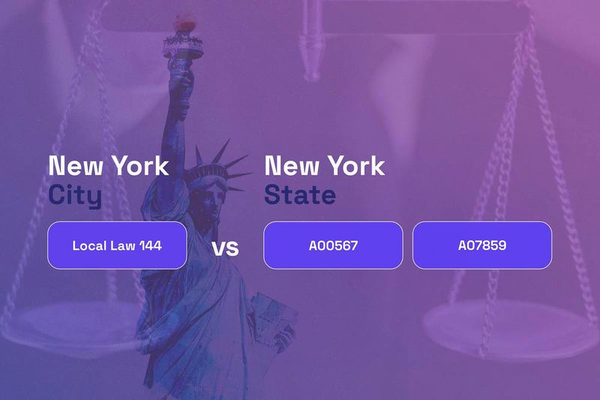

New York is taking the lead in regulating automated employment decision tools (AEDTs), proposing three laws at both state and local levels to increase transparency and safety around their use in hiring. These laws seek to impose requirements such as bias audits, notification of AEDT use, and disparate impact analysis. An AEDT is defined as a computational process, derived from machine learning, statistical modelling, data analytics, or artificial intelligence, that issues a simplified output used to substantially assist or replace discretionary decision making for employment decisions. Adoption of such tools was fuelled by the pandemic, and they can reduce the time to fill open positions, improve candidate experience, and increase diversity. However, there have been instances of misuse and inappropriate development of these tools, resulting in high-profile scandals and lawsuits.
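To make the idea of a bias audit more concrete, the sketch below computes selection rates per group and the impact ratio of each group relative to the most-selected group, which is the kind of metric a disparate impact analysis typically reports. The data, the group labels, the function names, and the 0.8 threshold (the "four-fifths" rule of thumb) are illustrative assumptions, not requirements taken from the laws summarised here.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of selected candidates per category.

    `records` is an iterable of (category, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for category, was_selected in records:
        totals[category] += 1
        if was_selected:
            selected[category] += 1
    return {c: selected[c] / totals[c] for c in totals}


def impact_ratios(rates):
    """Divide each category's selection rate by the highest selection rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    return {c: r / best for c, r in rates.items()}


def flag_below_threshold(ratios, threshold=0.8):
    """List categories whose impact ratio is below the threshold.

    The 0.8 default is an assumed rule-of-thumb value for illustration.
    """
    return sorted(c for c, r in ratios.items() if r < threshold)


# Made-up screening outcomes for two hypothetical age groups.
records = (
    [("under_40", True)] * 30
    + [("under_40", False)] * 70
    + [("40_and_over", True)] * 12
    + [("40_and_over", False)] * 88
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
flagged = flag_below_threshold(ratios)

for category in sorted(ratios):
    print(f"{category}: rate={rates[category]:.2f}, impact ratio={ratios[category]:.2f}")
print("Below threshold:", flagged)
```

A low impact ratio does not by itself establish unlawful discrimination; in practice it is a signal that the tool's outcomes for that group warrant closer review.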

States in the US are introducing legislation to regulate HR tech, with New York proposing legislation targeting automated employment decision tools (AEDTs) and California proposing multiple pieces of legislation. Federal efforts have now emerged, with Senators introducing the No Robot Bosses Act and the Exploitative Workplace Surveillance and Technologies Task Force Act. The No Robot Bosses Act seeks to protect job applicants and employees from the undisclosed use of automated decision systems, requiring employers to provide notice of when and how the systems are used. The Exploitative Workplace Surveillance and Technologies Task Force Act seeks to create an interagency task force to lead a whole-of-government study and report to Congress on workplace surveillance. The Biden-Harris administration has also secured voluntary commitments from various AI companies to ensure their products are safe and to build public trust.

The HR industry is facing challenges in ensuring consistent compliance with an expanding and fluctuating set of regulations, particularly in relation to the use of AI and automated decision-making tools. Two key pieces of legislation are New York City's Local Law 144 and the EU AI Act, which both aim to ensure fairness, transparency and accountability in their respective jurisdictions. Local Law 144 applies specifically to employers or employment agencies using automated employment decision tools, while the AI Act covers a broad range of AI systems and will apply to providers established within the European Union as well as to providers whose systems are used within it. Non-compliance with either law carries financial penalties, along with reputational and operational risks. Holistic AI offers expertise in AI auditing to help organisations navigate these complexities. Readers should note that this blog article does not offer legal advice or opinion.