August 2023

03 Aug 2023

AI governance refers to the rules and frameworks that ensure the responsible use of AI. It is necessary to mitigate legal, financial, and reputational risks and to promote trust in AI technologies. Effective AI governance involves a multi-layered approach, ranging from organizational structure to regulatory alignment, and it requires the involvement of everyone in an organization. AI governance measures and metrics, such as transparency, bias detection and mitigation, and impact on stakeholders, should be regularly assessed and improved. Effective AI governance offers benefits such as preventing AI harm, meeting legal and regulatory requirements, and promoting scalability and transparency. Holistic AI offers solutions to implement responsible AI governance through independent AI audits, risk assessments, and inventory management.

There is a push to specifically regulate the use of HR tech for employment decisions, because algorithms trained on biased data can perpetuate that bias and have an even greater impact than human prejudices. Algorithmic assessment tools can be more complicated to validate, potentially making it more difficult to justify a tool’s use. Algorithms can also reduce the explainability of hiring decisions, so disclosure to applicants about the use of automated tools and how they make decisions may be necessary. Well-crafted laws for HR tech can mandate disclosures to applicants, minimise bias through auditing, and require proper validation of these automated systems, complementing broader anti-discrimination laws. Policymakers worldwide are increasingly targeting HR tech for regulation.

July 2023

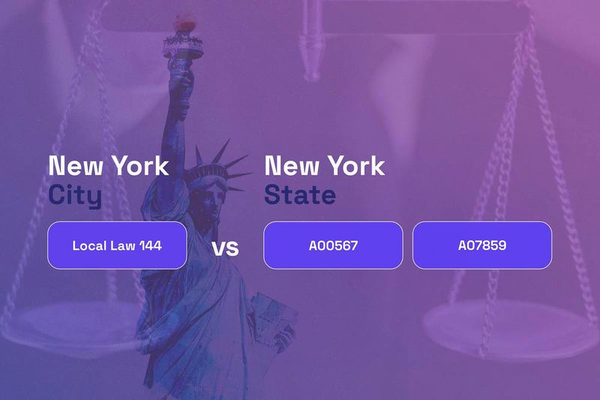

New York is taking the lead in regulating automated employment decision tools (AEDTs), proposing three laws at both state and local levels to increase transparency and safety around their use in hiring. These laws seek to impose requirements such as bias audits, notification of AEDT use, and disparate impact analysis. An AEDT is defined as a computational process that uses machine learning, statistical modelling, data analytics, or artificial intelligence to issue a simplified output that is used to substantially assist or replace discretionary decision making for employment decisions. Adoption of such tools was fueled by the pandemic, and they can reduce the time to fill open positions, improve candidate experience, and increase diversity. However, there have been instances of misuse and inappropriate development of these tools, resulting in high-profile scandals and lawsuits.
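To make the idea of a disparate impact analysis concrete, the sketch below computes per-group selection rates and impact ratios (each group's rate divided by the highest group's rate). It is a minimal illustration and not the method prescribed by any of the laws discussed here: the function names, the sample data, and the 0.8 threshold (borrowed from the commonly cited four-fifths rule of thumb) are all assumptions made for the example.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return the selection rate per group.

    `outcomes` is an iterable of (group, was_selected) pairs.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest selection rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections; ratios are undefined")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the threshold.

    The 0.8 default is an illustrative assumption (the four-fifths rule
    of thumb), not a value taken from the laws described in this article.
    """
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical data: 40 of 100 applicants selected in group_a,
# 24 of 80 applicants selected in group_b.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 24 + [("group_b", False)] * 56
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
```

A flagged group is a prompt for closer review rather than proof of discrimination; a real bias audit would also need to consider sample sizes, intersectional categories, and the specific requirements of the applicable law.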

States in the US are introducing legislation to regulate HR tech, with New York proposing legislation targeting automated employment decision tools (AEDTs) and California proposing multiple pieces of legislation. Federal efforts have now emerged, with Senators introducing the No Robot Bosses Act and the Exploitative Workplace Surveillance and Technologies Task Force Act. The No Robot Bosses Act seeks to protect job applicants and employees from the undisclosed use of automated decision systems, requiring employers to provide notice of when and how the systems are used. The Exploitative Workplace Surveillance and Technologies Task Force Act seeks to create an interagency task force to lead a whole-of-government study and report to Congress on workplace surveillance. The Biden-Harris administration has also secured voluntary agreements from various AI companies to ensure that products are safe and to build public trust.

The development and establishment of artificial intelligence (AI) standards have become a pressing necessity as the AI ecosystem rapidly evolves. Standards act as common guidelines, principles, and technical specifications for the development, deployment, and governance of AI systems. Technical standards in AI governance encompass foundational, process, measurement, and performance standards. Adopting standards enables organizations to benchmark, audit, and assess AI systems for conformity and performance, benefiting developers, consumers, and data subjects impacted by AI technologies. Standards bodies, such as the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), facilitate the development of consensus-driven standards through multi-stakeholder deliberations, promoting global and regional harmonisation.