October 2022

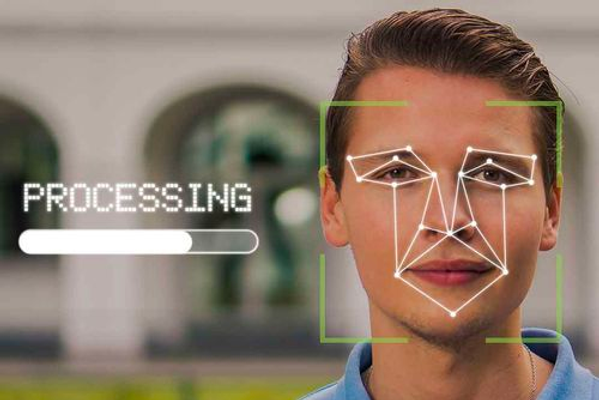

The increasing use of remote identity verification (IDV) technology has created new risks and ethical implications, including barriers to participation in banking and in time-critical products such as access to credit. Machine learning (ML) models enable IDV by extracting relevant data from the identity document, validating the original document, and then performing facial verification between the photo in the identity document and the selfie taken within the IDV app. However, poor-quality datasets used to train these ML models can lead to algorithmic bias and inaccuracies, which can result in individuals being treated unfairly. Managing the potential risks of AI bias in IDV requires technical assessment of the AI system’s code and data; independent auditing, testing, and review against bias metrics; and policies and processes to govern the use of AI.
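As an illustration of what a review against bias metrics could look like for the facial verification step, the sketch below compares error rates across demographic groups. It is a minimal example under stated assumptions, not a description of any particular IDV product: the record layout `(group, similarity_score, is_same_person)`, the function names, the threshold, and the sample scores are all hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records, threshold):
    """Compute per-group error rates for a face verification model.

    records: iterable of (group, similarity_score, is_same_person) tuples.
    This layout is an assumption made for the example.
    A pair is accepted as a match when its score is >= threshold.
    """
    counts = defaultdict(lambda: {"genuine": 0, "fnm": 0, "impostor": 0, "fm": 0})
    for group, score, is_same_person in records:
        c = counts[group]
        accepted = score >= threshold
        if is_same_person:
            c["genuine"] += 1
            if not accepted:
                c["fnm"] += 1  # false non-match: genuine user rejected
        else:
            c["impostor"] += 1
            if accepted:
                c["fm"] += 1  # false match: impostor accepted

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            # None when a group has no pairs of that kind
            "fnmr": c["fnm"] / c["genuine"] if c["genuine"] else None,
            "fmr": c["fm"] / c["impostor"] if c["impostor"] else None,
        }
    return rates


def max_disparity(rates, metric):
    """Largest absolute gap in one metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values)


# Hypothetical scores for two groups, purely for illustration.
sample = [
    ("A", 0.9, True), ("A", 0.8, True), ("A", 0.7, True), ("A", 0.4, True),
    ("A", 0.2, False), ("A", 0.3, False),
    ("B", 0.9, True), ("B", 0.5, True), ("B", 0.4, True), ("B", 0.7, True),
    ("B", 0.65, False), ("B", 0.1, False),
]
rates = error_rates_by_group(sample, threshold=0.6)
fnmr_gap = max_disparity(rates, "fnmr")
```

A large gap in the false non-match rate between groups would suggest that genuine users in one group are rejected more often than those in another, which is the kind of unfair treatment an audit is meant to surface. What counts as an acceptable gap is a policy decision that the code does not settle.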

August 2022

Facial recognition technology is widely used but also controversial and high-risk because of potential biases, privacy concerns, safety issues, and a lack of transparency. Some policymakers have banned facial recognition, while others require compliance with data protection laws. Risk management strategies can help mitigate these risks: examining the training data for representativeness and auditing for bias, implementing data management strategies and fail-safes, establishing safeguards to prevent malicious use, and ensuring appropriate disclosure of when the technology is used.
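One simple way to examine training data for representativeness is to compare each group's share of the dataset with a reference share for the population the system is meant to serve. The sketch below assumes that group labels are available for the training images and that reference shares are known. The function names, the tolerance, and the sample figures are hypothetical and chosen only for illustration.

```python
from collections import Counter


def representation_gaps(labels, reference_shares):
    """Return dataset share minus reference share for each reference group.

    labels: one group label per training example (assumed to be available).
    reference_shares: dict mapping group -> expected share between 0 and 1.
    A negative gap means the group is under-represented in the dataset.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("labels must not be empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


def underrepresented(gaps, tolerance):
    """Groups whose dataset share falls short of the reference by more than tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)


# Hypothetical dataset composition and reference shares.
labels = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(labels, reference)
flagged = underrepresented(gaps, tolerance=0.1)
```

A check like this only covers the composition of the data. It does not replace auditing the trained model's error rates for bias, since a dataset can be balanced by count and still produce uneven performance.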